Our article “Multimodal representations of biomedical knowledge from limited training whole slide images and reports using deep learning”, by Niccolò Marini et al., has been published in Medical Image Analysis.

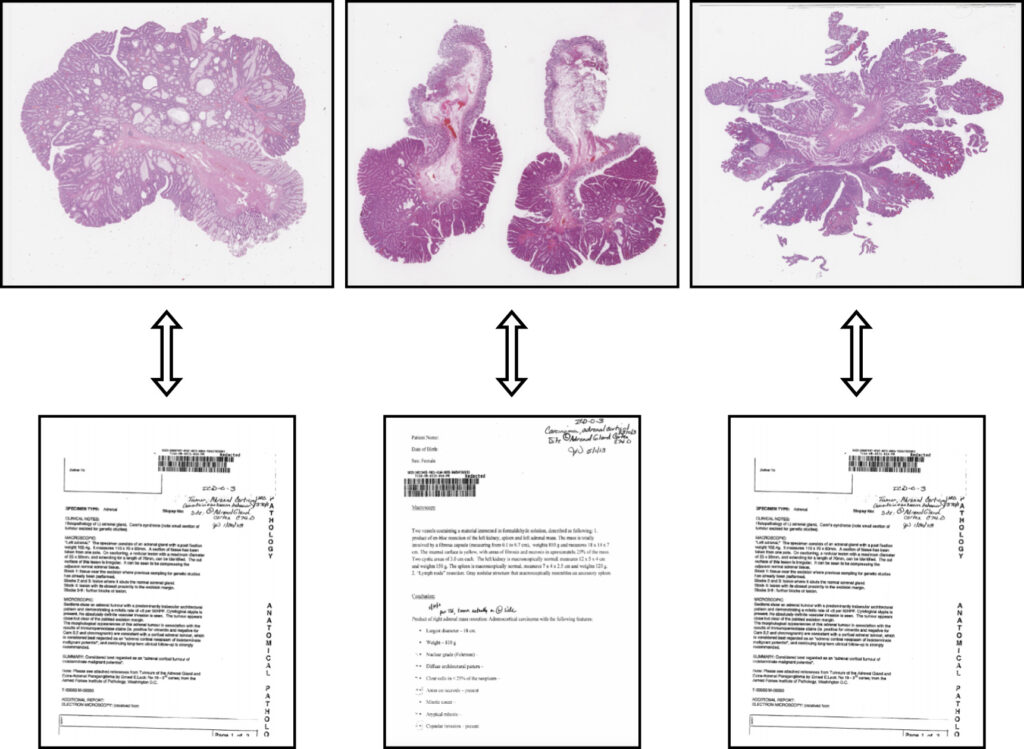

In this work, we developed a novel multimodal learning architecture that integrates images and reports, offering a robust new approach to histopathology data representation. This model can be used as a powerful backbone to solve various computational pathology tasks.

The multimodal data representation outperforms unimodal representations on whole slide image (WSI) classification, making it possible to train effective networks on smaller datasets.

Combining unsupervised learning methods allows the model to learn similarities and dissimilarities between images and reports. The model effectively represents medical ontologies through visual knowledge, creating meaningful connections between the visual information in images and the textual details in reports. This integration opens up new possibilities for enhancing the accuracy and efficiency of computational pathology, which can contribute to better patient outcomes and advance the field of medical research.
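
To make the idea of learning similarities and dissimilarities between images and reports more concrete, here is a minimal sketch of one common way to align two modalities: a symmetric contrastive loss over paired embeddings. This is an illustration only, not the objective used in the article. The function names, the `temperature` parameter, and the assumption that each slide and its report have already been encoded into fixed-size vectors are all hypothetical.

```python
import numpy as np


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_alignment_loss(image_emb, report_emb, temperature=0.1):
    """Symmetric contrastive loss for a batch of (image, report) pairs.

    Row i of image_emb and row i of report_emb are assumed to describe the
    same case (hypothetical setup). Matching pairs are pulled together and
    mismatched pairs in the batch are pushed apart.
    """
    # L2-normalize so the dot product is a cosine similarity.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    report_emb = report_emb / np.linalg.norm(report_emb, axis=1, keepdims=True)

    # Pairwise similarity matrix: entry (i, j) compares image i with report j.
    logits = image_emb @ report_emb.T / temperature
    idx = np.arange(logits.shape[0])

    # Cross-entropy with the matching pair (the diagonal) as the target,
    # computed in both directions: image-to-report and report-to-image.
    loss_image_to_report = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_report_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_image_to_report + loss_report_to_image)


# Toy usage with random vectors standing in for encoder outputs.
rng = np.random.default_rng(0)
image_emb = rng.normal(size=(4, 8))
report_emb = image_emb + 0.01 * rng.normal(size=(4, 8))  # well-aligned pairs
aligned_loss = contrastive_alignment_loss(image_emb, report_emb)
shuffled_loss = contrastive_alignment_loss(image_emb, report_emb[[1, 2, 3, 0]])
```

In this toy setup the loss is lower when each image is paired with its own report than when the reports are shuffled, which is the signal such an objective uses to bring the two modalities into a shared representation.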